The latest generation of large language model (LLM) chatbots has made great strides and exhibits an impressive range of human-like abilities.

In the excitement surrounding these breakthroughs, people have imagined a future where these systems take over entire professions: drafting legal arguments, delivering medical diagnoses, managing complex engineering systems, and even making high-level policy decisions.

This has fuelled an enormous wave of interest, optimism and, in some cases, alarm. But it is important to understand that, like other forms of artificial intelligence, these systems work in a completely different way to human intelligence.

The result is that although sometimes appearing human – perhaps even super-human – they can also behave strangely and fail catastrophically.

The inner workings of LLMs

A large language model can be thought of as a very powerful predictive text programme: given some text as input, the model predicts which word or words are most likely to come next.

Models like GPT-4, Gemini, and DeepSeek-R1 learn to do this by training on vast amounts of text data – billions or even trillions of words – so that they can capture patterns in language and generate responses that are contextually relevant to the input provided.
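To make the predictive-text analogy concrete, here is a deliberately tiny Python sketch: a bigram model that counts which word follows which in a few training sentences, then always picks the most frequent follower. Real LLMs use neural networks over subword tokens and condition on far longer contexts, so this only illustrates the basic idea of predicting the next word from learned statistics. The function names and the toy corpus are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(corpus: List[str]) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if nothing ever followed it."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts: Dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedily extend `start` by repeatedly appending the most likely next word."""
    words = [start.lower()]
    for _ in range(max_words):
        following = predict_next(counts, words[-1])
        if following is None:
            break
        words.append(following)
    return " ".join(words)


# Toy training text (hypothetical, made up for illustration).
corpus = [
    "gouda is a cheese",
    "cheddar is a popular cheese",
    "mozzarella is a popular cheese",
]
counts = train_bigrams(corpus)
print(generate(counts, "gouda"))  # prints "gouda is a popular cheese"
```

Starting from "gouda", the model produces "gouda is a popular cheese", a fluent sentence that appears nowhere in its training text: it simply chains together the most frequent continuations, and nothing in the procedure checks whether the result is true.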

The sophistication of these models, combined with the extensive context they draw on, enables LLM-based chatbots to generate text that sounds natural in a conversation.

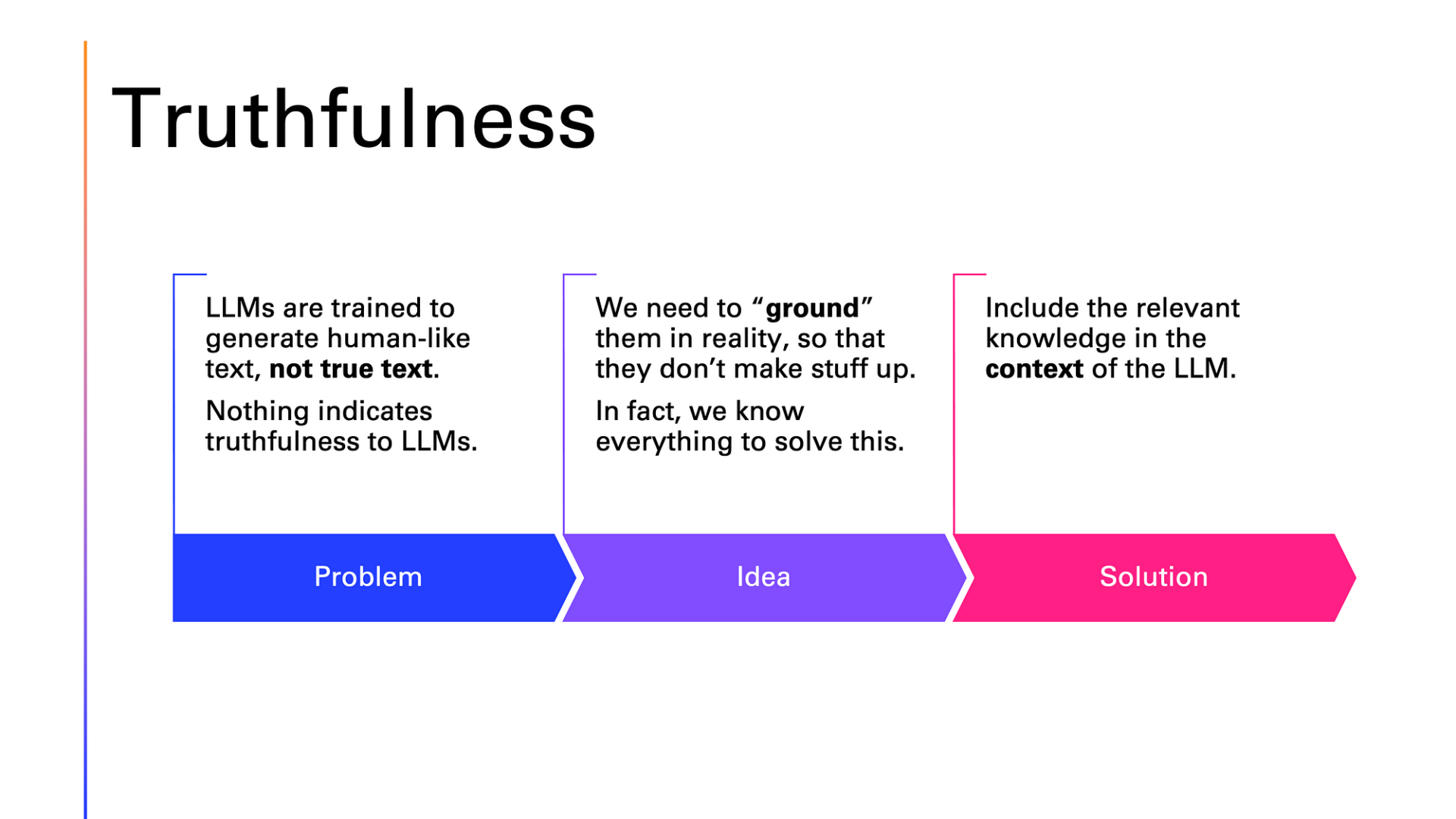

However, this output is only a prediction based on a learned statistical model, which can lead to undesirable behaviour, including the widely reported tendency of LLMs to ‘hallucinate’, i.e., to make false statements, often in a way that sounds convincing and authoritative.

Understanding AI hallucinations

Eliminating errors, or even understanding their exact source, may be difficult or impossible given the size and complexity of the underlying LLMs.

For instance, Google recently had to edit a Super Bowl advertisement for its Gemini chatbot in which the chatbot falsely claimed that Gouda made up 50–60% of global cheese consumption.

Google – and Gemini itself – said that this information likely came from an unreliable source, but the model was unable to identify the specific source or to explain why it had ignored reputable sources indicating that cheddar and mozzarella are the most widely consumed cheeses.

To quote Gemini itself: “My failure to account for the widely reported popularity of mozzarella and cheddar is a significant oversight, and it underscores the weaknesses in my current information processing.”

These limitations make LLMs less useful in settings where precision matters, and where issues such as safety, transparency, fairness and regulatory compliance need to be considered – such as healthcare, engineering, journalism, or finance.

In healthcare, for example, decisions must be supported by clearly identifiable clinical research; in engineering, designs must be based on exact specifications of components, including expert knowledge about compatibility; in journalism, reporters must back their stories with referenced sources; and in finance, complex rules and regulations must be followed. In all cases, failure can be costly and potentially dangerous.

Knowledge-based AI is needed for high-stakes sectors

The alternative to large language models, known as knowledge-based AI, is to use carefully curated expert knowledge to answer complex questions correctly.

Unlike machine learning, which finds patterns in vast datasets and produces statistical outputs, knowledge-based AI aims to improve the accuracy of results by making logical, explainable decisions based on data combined with expert knowledge.

Take healthcare, for example. There’s a system called SNOMED – short for the Systematized Nomenclature of Medicine – that’s essentially a giant, structured dictionary of medical terms.

It helps systems understand relationships between things like diseases, symptoms, treatments and anatomy. If a doctor records that a patient has high blood pressure, a knowledge-based system can infer related risks (like heart disease) or flag dangerous drug interactions – not through complex statistical modelling that we have no way of testing, but by following well-established clinical knowledge.
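The kind of reasoning described above can be sketched as a small forward-chaining rule engine in Python. Everything here is hypothetical and heavily simplified: the facts, the relation names (`is_a`, `has_condition`, `risk_factor_for`, `interacts_with`), the placeholder drugs and the four rules are illustrative assumptions, not real SNOMED CT content or clinical guidance, and SNOMED itself is a terminology rather than a rule engine. The point is that every conclusion is derived from explicitly stated facts and carries a record of the premises it came from.

```python
from typing import Dict, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def infer(facts: Set[Triple]) -> Dict[Triple, Tuple[Triple, ...]]:
    """Apply hand-written rules until no new facts appear (forward chaining).

    Returns each derived fact mapped to the premises it was derived from,
    so every conclusion can be traced back to curated facts.
    """
    known: Set[Triple] = set(facts)
    why: Dict[Triple, Tuple[Triple, ...]] = {}
    changed = True
    while changed:
        changed = False
        derived: Dict[Triple, Tuple[Triple, ...]] = {}
        for a in known:
            for b in known:
                (s1, p1, o1), (s2, p2, o2) = a, b
                if o1 != s2:
                    continue  # every rule below chains a's object into b's subject
                if p1 == "is_a" and p2 == "is_a":
                    # Rule 1 (hypothetical): "is a" is transitive.
                    derived.setdefault((s1, "is_a", o2), (a, b))
                elif p1 == "has_condition" and p2 == "is_a":
                    # Rule 2 (hypothetical): a specific condition implies its broader category.
                    derived.setdefault((s1, "has_condition", o2), (a, b))
                elif p1 == "has_condition" and p2 == "risk_factor_for":
                    # Rule 3 (hypothetical): a condition's known risks become the patient's risks.
                    derived.setdefault((s1, "at_risk_of", o2), (a, b))
                elif p1 == "takes" and p2 == "interacts_with" and (s1, "takes", o2) in known:
                    # Rule 4 (hypothetical): flag two prescribed drugs recorded as interacting.
                    alert = (s1, "interaction_alert", f"{o1} + {o2}")
                    derived.setdefault(alert, (a, b, (s1, "takes", o2)))
        for fact, premises in derived.items():
            if fact not in known:
                known.add(fact)
                why[fact] = premises
                changed = True
    return why


def explain(fact: Triple, why: Dict[Triple, Tuple[Triple, ...]], depth: int = 0) -> None:
    """Print a fact and, indented beneath it, the premises it rests on."""
    print("  " * depth + " ".join(fact))
    for premise in why.get(fact, ()):
        explain(premise, why, depth + 1)


# Hypothetical, simplified facts; not real SNOMED CT content or clinical guidance.
facts: Set[Triple] = {
    ("essential hypertension", "is_a", "high blood pressure"),
    ("high blood pressure", "is_a", "cardiovascular disorder"),
    ("high blood pressure", "risk_factor_for", "heart disease"),
    ("drug-A", "interacts_with", "drug-B"),  # placeholder drug names
    ("patient-1", "has_condition", "essential hypertension"),
    ("patient-1", "takes", "drug-A"),
    ("patient-1", "takes", "drug-B"),
}

why = infer(facts)
explain(("patient-1", "at_risk_of", "heart disease"), why)
```

Calling `explain` prints the chain of facts behind the heart-disease risk, indented by depth. The engine can only conclude what follows from its inputs, so its answers are only as good as the curated facts and rules that experts give it.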

This kind of AI is slower to build – it requires experts to carefully define what the system should know and how it should reason.

But the results are far more trustworthy. There are no hallucinations because the AI isn’t inventing anything – it’s drawing conclusions from facts that are already known and curated.

Unlocking new potential in AI

The really exciting future lies in combining the strengths of these two approaches.

LLMs can help make knowledge-based systems more user-friendly by interpreting natural language questions and helping organise expert knowledge.

Meanwhile, knowledge-based AI can act as a kind of “fact-checker” or reasoning engine behind the scenes, grounding LLMs in accuracy and reducing their tendency to make things up.
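One way to picture the “fact-checker” role is a short Python sketch. It assumes that an LLM's draft answer has already been broken down into simple (subject, relation, object) claims; that extraction step is hypothetical and not shown. Each claim is then looked up in a small curated knowledge base that records whether a trusted source supports or refutes it, and the draft is approved only if every claim is supported. The entries reuse the cheese example from earlier; the relation names and source labels are placeholders, not real references.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Claim = Tuple[str, str, str]  # (subject, relation, object)


@dataclass(frozen=True)
class Entry:
    holds: bool  # True if the curated source supports the claim, False if it refutes it
    source: str  # provenance, so every verdict can be traced back


def check_claim(claim: Claim, kb: Dict[Claim, Entry]) -> Tuple[str, Optional[str]]:
    """Classify one claim as supported, contradicted or unverified."""
    entry = kb.get(claim)
    if entry is None:
        # Absence of evidence is flagged, not treated as support.
        return "unverified", None
    return ("supported" if entry.holds else "contradicted"), entry.source


def review(claims: List[Claim], kb: Dict[Claim, Entry]) -> Tuple[List[Tuple[Claim, str, Optional[str]]], bool]:
    """Check every claim in a draft; approve it only if all claims are supported."""
    verdicts = [(claim, *check_claim(claim, kb)) for claim in claims]
    approved = all(verdict == "supported" for _, verdict, _ in verdicts)
    return verdicts, approved


# Hypothetical curated entries; source labels are placeholders, not real references.
kb: Dict[Claim, Entry] = {
    ("cheddar", "is_among", "most widely consumed cheeses"): Entry(True, "curated-source-1"),
    ("mozzarella", "is_among", "most widely consumed cheeses"): Entry(True, "curated-source-1"),
    ("gouda", "accounts_for", "50-60% of global cheese consumption"): Entry(False, "curated-source-2"),
}

# Claims assumed to have been extracted from an LLM draft (extraction not shown).
draft: List[Claim] = [
    ("gouda", "accounts_for", "50-60% of global cheese consumption"),
    ("cheddar", "is_among", "most widely consumed cheeses"),
    ("brie", "is_among", "most widely consumed cheeses"),
]

verdicts, approved = review(draft, kb)
for claim, verdict, source in verdicts:
    print(verdict, claim, source)
print("approved:", approved)
```

Here the Gouda claim comes back as contradicted, the cheddar claim as supported and the brie claim as unverified, so the draft is not approved. Rejecting unverified claims rather than letting them through is a deliberately cautious design choice; a real system would also need a reliable way to turn free text into claims, which is the hard part this sketch skips.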

This could lead to the development of AI systems that combine general and expert knowledge with sophisticated conversational abilities and more predictable, explainable behaviour.

Ian Horrocks is professor of computer science at the University of Oxford, a fellow of the Royal Society, a BCS Lovelace Medallist, and co-founder of Oxford Semantic Technologies Limited.

The post LLMs are impressive but unreliable, we need AI built on expert knowledge appeared first on UKTN.